Assertions

DataHub Cloud Observe currently supports monitoring data on Snowflake, Redshift, BigQuery, and Databricks. For other data platforms, it can still monitor assertions on dataset metrics (such as row count or column nullness) and on dataset freshness by using ingested statistics.

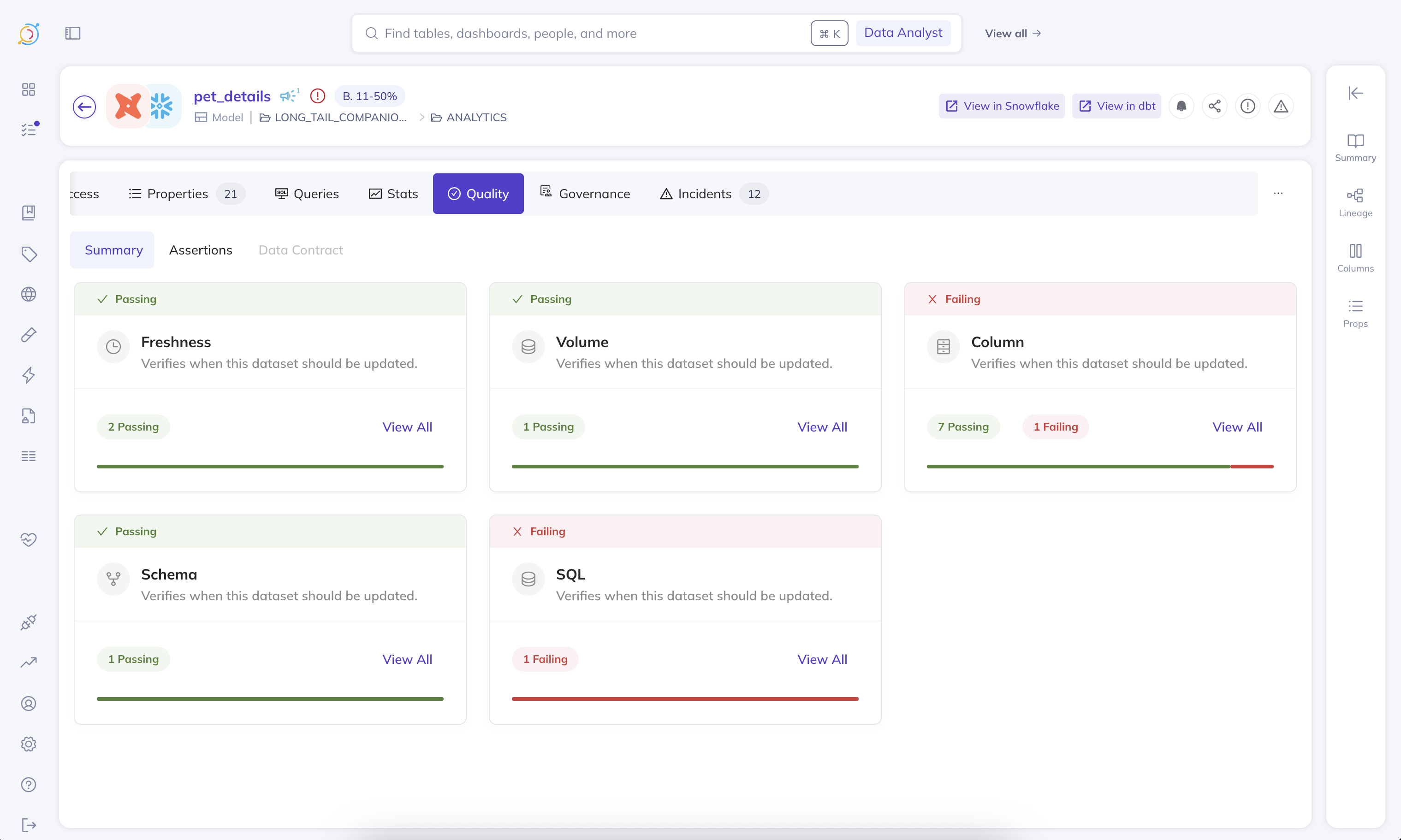

An assertion is a data quality test that finds data that violates a specified rule. Assertions serve as the building blocks of Data Contracts: they are how we verify that a contract is met.

How to Create and Run Assertions

Data quality tests (a.k.a. assertions) can be created and run by DataHub Cloud or ingested from a 3rd party tool.

DataHub Cloud Assertions

For DataHub-provided assertion runners, we can deploy an agent in your environment that connects to your sources and to DataHub. DataHub Cloud Observe offers out-of-the-box evaluation of the following kinds of assertions:

Bulk Creating Assertions

You can bulk create Freshness and Volume Smart Assertions (AI Anomaly Monitors) across several tables at once via the Data Health Dashboard.

To bulk create column metric assertions on a given dataset, follow the steps under the Anomaly Detection section of the Column Assertion docs.

Detecting Anomalies Across Massive Data Landscapes

In many cases, you either do not have the time to work out what a good rule for an assertion is, or strict rules simply do not meet your data validation needs. Traditional rule-based assertions can become inadequate when dealing with complex data patterns or large-scale operations.

Common Scenarios

Here are some typical situations where manual assertion rules fall short:

Seasonal data patterns - A table whose row count changes exhibit weekly seasonality may need a different set of assertions for each day of the week, making static rules impractical to maintain.

Statistical complexity across large datasets - Figuring out the expected standard deviation for each column can be incredibly time-consuming, and it is not feasible across hundreds of tables, especially when each table has unique characteristics.

Dynamic data environments - When data patterns evolve over time, manually updating assertion rules becomes a maintenance burden that can lead to false positives or missed anomalies.
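To make the seasonality scenario concrete, here is a minimal sketch of the kind of rule you would otherwise have to build and maintain by hand: a separate baseline per day of the week, flagging a row-count change whose z-score against that weekday's history exceeds a threshold. This is an illustrative stand-in, not the model behind Smart Assertions; the function name, the input shape, and the 3.0 threshold are assumptions.

```python
from collections import defaultdict
from statistics import mean, stdev


def weekday_anomalies(history, threshold=3.0):
    """Flag row-count changes that deviate from their weekday's baseline.

    Hypothetical helper for illustration only.
    history: list of (datetime.date, row_count_change) tuples, oldest first.
    Returns the dates whose change is more than `threshold` standard
    deviations away from the mean of earlier changes on the same weekday.
    """
    seen = defaultdict(list)  # weekday (0 = Monday) -> earlier changes
    flagged = []
    for day, change in history:
        past = seen[day.weekday()]
        # Need at least two earlier points on this weekday to estimate spread.
        if len(past) >= 2:
            mu, sigma = mean(past), stdev(past)
            if sigma > 0 and abs(change - mu) / sigma > threshold:
                flagged.append(day)
        past.append(change)
    return flagged
```

Per-weekday baselines handle this one pattern, but every additional pattern (monthly cycles, holidays, gradual growth) would need its own hand-built rule per table, which is the maintenance burden that Smart Assertions aim to remove.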

The AI Smart Assertion Solution

In these scenarios, you may want to consider creating a Smart Assertion to let machine learning automatically detect the normal patterns in your data and alert you when anomalies occur. This approach allows for more flexible and adaptive data quality monitoring without the overhead of manual rule maintenance.

Both traditional and smart assertions can be defined through the DataHub API or the UI.

Reporting from 3rd Party tools

You can integrate 3rd party tools as follows:

If you opt for a 3rd party tool, it will be your responsibility to ensure the assertions are run based on the Data Contract spec stored in DataHub. With 3rd party runners, you can get the Assertion Change events by subscribing to our Kafka topic using the DataHub Actions Framework.
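As a rough illustration of what a subscriber might do with these events, the sketch below filters already-deserialized events down to failed assertion runs. The event shape (an `entityType` of `assertion`, a `category` of `RUN`, an `operation` of `COMPLETED`, and `runResult`/`asserteeUrn` entries under `parameters`) is an assumption loosely modeled on DataHub's Entity Change Events; check the Actions Framework documentation for the actual schema before relying on it. In a real deployment, this logic would sit inside a custom action rather than operate on plain dicts.

```python
from typing import Any, Dict, Iterable, List


def failed_assertion_runs(events: Iterable[Dict[str, Any]]) -> List[Dict[str, str]]:
    """Return the assertion and dataset URNs of assertion runs that failed.

    ASSUMPTION: each event is a dict with entityType, category, operation,
    entityUrn, and a `parameters` dict holding runResult and asserteeUrn.
    Verify these field names against the real event schema.
    """
    failures = []
    for event in events:
        # Ignore change events about other entity types (datasets, tags, ...).
        if event.get("entityType") != "assertion":
            continue
        # Only completed runs carry a final result.
        if event.get("category") != "RUN" or event.get("operation") != "COMPLETED":
            continue
        params = event.get("parameters") or {}
        if params.get("runResult") == "FAILURE":
            failures.append(
                {
                    "assertion": event.get("entityUrn", ""),
                    "dataset": params.get("asserteeUrn", ""),
                }
            )
    return failures
```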

Alerts

Beyond viewing the results of assertion checks (and their history) on the physical asset's page in the DataHub UI and through DataHub API calls, you can also be notified via Slack (DMs or a team channel) based on your subscriptions to assertion run events, or when an incident is raised or resolved. In the future, we'll also provide the ability to subscribe directly to contracts.

With DataHub Cloud Observe, you can also react to Assertion Run Events by listening to API events via AWS EventBridge. Availability and ease of setup depend on your current DataHub Cloud setup; chat with your DataHub Cloud representative to learn more.

Sifting through the noise & Data Health Reporting

Sometimes alerts can get noisy, and it's hard to sift through Slack notifications to figure out what's important. Sometimes you need to figure out which of the tables your team owns actually have data quality checks running on them. The Data Health Dashboard provides a bird's-eye view of the health of your data landscape, and you can slice and dice the data to find the exact answers you're looking for.

Cost

We provide many ways to run your assertions, so that you can use the cheapest and/or the most accurate means, depending on your use case. For example, for Freshness (SLA) assertions, it is relatively cheap to use the source platform's Audit Log or Information Schema to run freshness checks. We support both of those, as well as Last Modified Column, High Watermark Column, and DataHub Operation (see the docs for more details).
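To show the idea behind the Last Modified Column approach, here is a minimal sketch against an in-memory SQLite table: read the most recent value of a timestamp column and check whether it falls within the expected freshness window. The table name, the `last_updated` column, ISO-8601 string storage, and the window sizes are assumptions; DataHub's own evaluator runs its queries against your warehouse and may differ in detail.

```python
import sqlite3
from datetime import datetime, timedelta


def is_fresh(conn, table, column, now, max_age):
    """Return True if the newest value in `column` is within `max_age` of `now`.

    Hypothetical helper. Timestamps are assumed to be ISO-8601 strings.
    Identifiers cannot be bound as SQL parameters, so `table` and `column`
    must come from trusted configuration, never from user input.
    """
    row = conn.execute(f"SELECT MAX({column}) FROM {table}").fetchone()
    if row[0] is None:
        return False  # empty table: treat as stale
    latest = datetime.fromisoformat(row[0])
    return now - latest <= max_age
```

For example, if the newest row was updated at 11:30 and the check runs at 15:00, a 6-hour window passes while a 3-hour window fails.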

Execution details - Where and How

There are a few ways DataHub Cloud assertions can be executed:

- Directly query the source system:
   a. Information Schema tables are used by default to power cheap, fast checks on a table's freshness or row count.
   b. Audit Log or Operation Log tables can be used to granularly monitor table operations.
   c. The table itself can also be queried directly. This is useful for freshness checks referencing last_updated columns, row count checks targeting a subset of the data, and column value checks. We offer several optimizations to reduce query costs for these checks.
- Reference DataHub metadata:
   a. Operations that are reported via ingestion or our SDKs can power monitoring of table freshness.
   b. DatasetProfile and SchemaFieldProfile data ingested or reported via SDKs can power monitoring of table metrics and column metrics.
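When the table itself is queried, a row count check on a subset of the data boils down to a filtered COUNT(*) compared against expected bounds. The following minimal SQLite-based sketch shows only the shape of such a query; the `events` table, the `region` filter, and the bounds are hypothetical, and it does not reproduce the cost optimizations DataHub applies.

```python
import sqlite3


def row_count_in_range(conn, table, where, params, min_rows, max_rows):
    """Count rows matching a WHERE clause and check the count against bounds.

    Hypothetical helper. `table` and `where` are inlined into the SQL, so they
    must come from trusted configuration; filter values are bound via `params`.
    Returns (count, passed).
    """
    sql = f"SELECT COUNT(*) FROM {table} WHERE {where}"
    (count,) = conn.execute(sql, params).fetchone()
    return count, min_rows <= count <= max_rows
```

Binding the filter values as parameters, rather than formatting them into the string, keeps the check safe to configure with arbitrary values such as region names.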

Privacy: Execute In-Network, avoid exposing data externally

As a part of DataHub Cloud, we offer a Remote Executor deployment model. If this model is used, assertions execute within your network, and only the results are sent back to DataHub Cloud. Neither your actual credentials nor your source data leave your network.

Source system selection

Assertions will execute queries using the same source system that was used to initially ingest the table.

In some scenarios, customers may have multiple ingestion sources for the same table, for example a BigQuery table. In this case, by default the executor uses the ingestion source that was used to ingest the table's DatasetProperties. Your customer success rep can change this behavior.
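The default selection rule can be summarized as: among the ingestion sources that have ingested the table, prefer the one that produced its DatasetProperties, unless an override has been configured. The sketch below encodes that rule over hypothetical records; the `IngestionSource` type, its fields, the `override` argument, and the fallback of returning `None` are illustrative assumptions, not DataHub's internal data model. In practice, changing this behavior goes through your customer success rep.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class IngestionSource:
    """Hypothetical record of one ingestion source that touched a table."""
    name: str
    aspects_ingested: Set[str] = field(default_factory=set)


def pick_execution_source(
    sources: List[IngestionSource], override: Optional[str] = None
) -> Optional[IngestionSource]:
    """Choose which ingestion source's connection to run assertions with."""
    if override is not None:
        # An explicitly configured source wins when it is one of the candidates.
        for source in sources:
            if source.name == override:
                return source
    # Default: the source that ingested the table's DatasetProperties.
    for source in sources:
        if "DatasetProperties" in source.aspects_ingested:
            return source
    # Assumed fallback: no suitable source found.
    return None
```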